In this notebook, we’ll use the MovieLens 10M dataset and collaborative filtering to create a movie recommendation model.

We’ll use the data from movies.dat and ratings.dat to create embeddings that will help us predict ratings for movies I haven’t watched yet.

Create some personal data

Before I wrote any code to train models, I code-generated a quick UI to rate movies to generate my_ratings.dat, to append to ratings.dat.

There is a bit of code needed to do that.

The nice part is using inline script metadata and uv, we can write (generate) and run the whole tool in a single file.

I’ve started posting more on Bluesky and I noticed that articles from my site didn’t have social image previews 😔

I looked into Poison’s code (the theme this site is based on) and found that it supports social image previews at the site level or in the site’s assets folder.

This approach didn’t quite work for me.

I recently switched to using page bundles which group markdown and content in the same folder and make linking to images from markdown straightforward.

With a few modifications, I was able to make the code work to use images in the page bundles for social previews as well.

I explored how embeddings cluster by visualizing LLM-generated words across different categories.

The visualizations helped build intuition about how these embeddings relate to each other in vector space. Most of the code was generated using Sonnet.

!pip install --upgrade pip

!pip install openai

!pip install matplotlib

!pip install scikit-learn

!pip install pandas

!pip install plotly

!pip install "nbformat>=4.2.0"

We start by setting up functions to call ollama locally to generate embeddings and words for several categories.

The generate_words function occasionally doesn’t adhere to instructions, but the end results are largely unaffected.

Language models are more than chatbots - they’re tools for thought.

The real value lies in using them as intellectual sounding boards to brainstorm, refine and challenge our ideas.

What if you could explore every tangent in a conversation without losing the thread?

What if you could rewind discussions to explore different paths?

Language models make this possible.

This approach unlocks your next level of creativity and productivity.

Context Quality Counts

I’ve found language models useful for iterating on ideas and articulating thoughts. Here’s an example conversation (feel free to skip; this conversation is used in the examples later on):

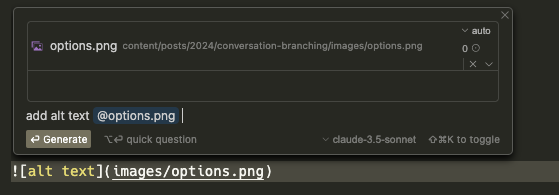

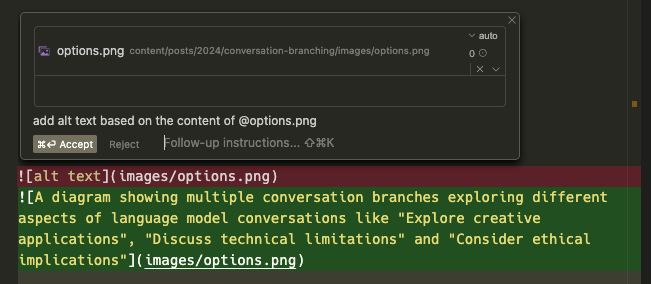

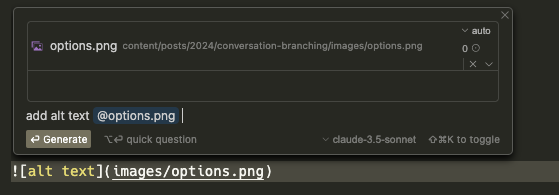

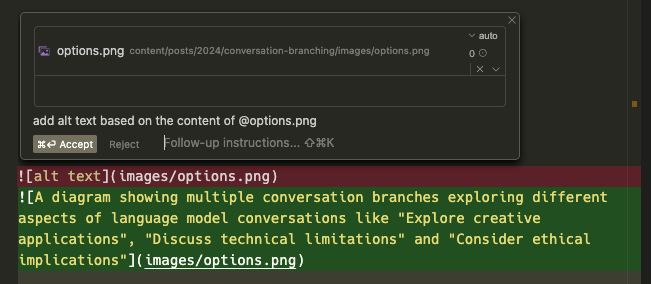

Using Cursor, we can easily get a first pass at creating alt text for an image using a language model.

It’s quite straightforward using a multi-modal model/prompt.

For this example, we’ll use claude-3-5-sonnet-20241022.

Here’s what it generates.

Having completed lesson 5 of the FastAI course, I prompted Claude to give me some good datasets upon which to train a random forest model.

This housing dataset from Kaggle seemed like a nice option, so I decided to give it a try.

I am also going to try something that Jeremy Howard recommended for this notebook/post, which is to not refine or edit my process very much.

I am mostly going to try things out and if they don’t work, I’ll try and write up why and continue, rather than finding a working path and adding commentary at the end.

In this notebook/post, we’re going to be using the markdown content from my blog to try a language model.

From this, we’ll attempt to prompt the model to generate a post for a topic I might write about.

Let’s import fastai and disable warnings since these pollute the notebook a lot when I’m trying to convert these notebooks into posts (I am writing this as a notebook and converting it to a markdown file with this script).

I recently found Joe’s article, We All Know AI Can’t Code, Right?.

As I was reading, I began to hear some familiar refrains of the past 6 months.

Raise your hand if you’ve ever used GitHub or Stack Overflow or any other kind of example code or library or whatever to help you get started on the foundational solution to the business problem that your code needs to solve.

Now, put your hand down if you’ve never once had to spend hours, sometimes days, tweaking and modifying that sample code a million times over to make it work like you need it to work to solve your unique problem.

I had the idea to try and use a language model as a random number generator.

I didn’t expect it to actually work as a uniform random number generator but was curious to see what the distribution of numbers would look like.

My goal was to prompt the model to generate a number between 1 and 100.

I could also vary the temperature to see how that changed the distribution of the numbers.

In this notebook, we train two similar neural nets on the classic Titanic dataset using techniques from fastbook chapter 1 and chapter 4.

The first, we train using mostly PyTorch APIs.

The second, with FastAI APIs.

There are a few cells that output warnings.

I kept those because I wanted to preserve print outs of the models’ accuracy.

The Titanic data set can be downloaded from the link above or with:

!kaggle competitions download -c titanic

To start, we install and import the dependencies we’ll need: