I’ve been chatting with qwen2, a model from Alibaba.

I mostly chatted with it in English, but it appears to support several other languages. I noticed a bit of Chinese leaking through even though I don’t speak it, so I’m not sure how I would have introduced it into the conversation.

user: do you have a name

assistant: As an AI, I don’t have personal names or identities like humans do. However, you can call me Assistant to easily refer to me in our conversation.

I was inspired by Daniel’s post to add sidenotes to this blog.

I used claude-3.5-sonnet to generate the CSS and HTML shortcode to do this.

I was impressed by how well it turned out.

Now I need to read the CSS in more detail to understand what Claude did.

It was almost too easy.

I’m not the most competent CSS writer and I had never written a Hugo shortcode before.

In several turns with Sonnet in Cursor, I was able to create a basic styled shortcode for a sidenote that appeared as a superscript number to start.

I prompted the model to allow me to use content in-line as the shortcode anchor and it generated those modifications.

Then I ran into an issue: the sidenote content overflowed on the right side when the anchor was too far right, and most of the time on mobile, so I asked the model for some options.

It suggested showing the sidenote content below the main post content.

I liked that, but didn’t like how it was shifting the content when it revealed the sidenote, so I prompted it to show the sidenote above the rest of the content.

A nice read by Stuart on Python development tools.

This introduced me to the pyproject.toml configuration file, which is more comprehensive than a requirements file.

It’s something I’ll need to research a bit more before I’m ready to confidently adopt it.

Claude’s character

A video about the personality of the AI, Claude.

I’ve not become a big “papers” person yet, so this was my first introduction to “Constitutional AI”, which is a training approach where you use the model to help train itself, by having it evaluate its own responses against a written set of principles.

I reproduced Josh’s claude-3.5-sonnet mirror test.

I hadn’t realized gpt-4 and claude-3-opus had also been “passing” this test since back in March.

More interesting still, Sonnet actually seems to resist speaking in the first person about itself.

Fascinating research and evolution of the models’ behaviors.

After reading a bit more, apparently this type of model behavior has been around at least since Bing/Sydney (paywall, sorry).

I spent some time experimenting with OpenDevin using claude-3-opus (I couldn’t find an easy way to use claude-3.5-sonnet).

The agentic capabilities were not bad.

I gave a prompt and, behind the scenes, the agent iterated, created files, ran code, and course-corrected.

I didn’t love that there wasn’t an obvious way to interrupt it or help it course-correct.

My first attempt was with the same prompt I sent to Sonnet to build Tactic.

The result wasn’t bad.

OpenDevin (running in a container, which makes me feel better) set up several files in a project, then seemed to install dependencies, iterate on issues, and inspect the site running in the browser.

At least, this was what it claimed to be doing.

It was hard to validate what was happening, especially because the tool’s built-in browser never loaded anything.

Maybe this was a bug or something unusual happening on the model side.

Weirdly, I was running into an issue where my screen would flash white whenever a ⌘F search didn’t return a result.

It was irritating me for several days.

Fortunately, I was able to find a solution that addressed it.

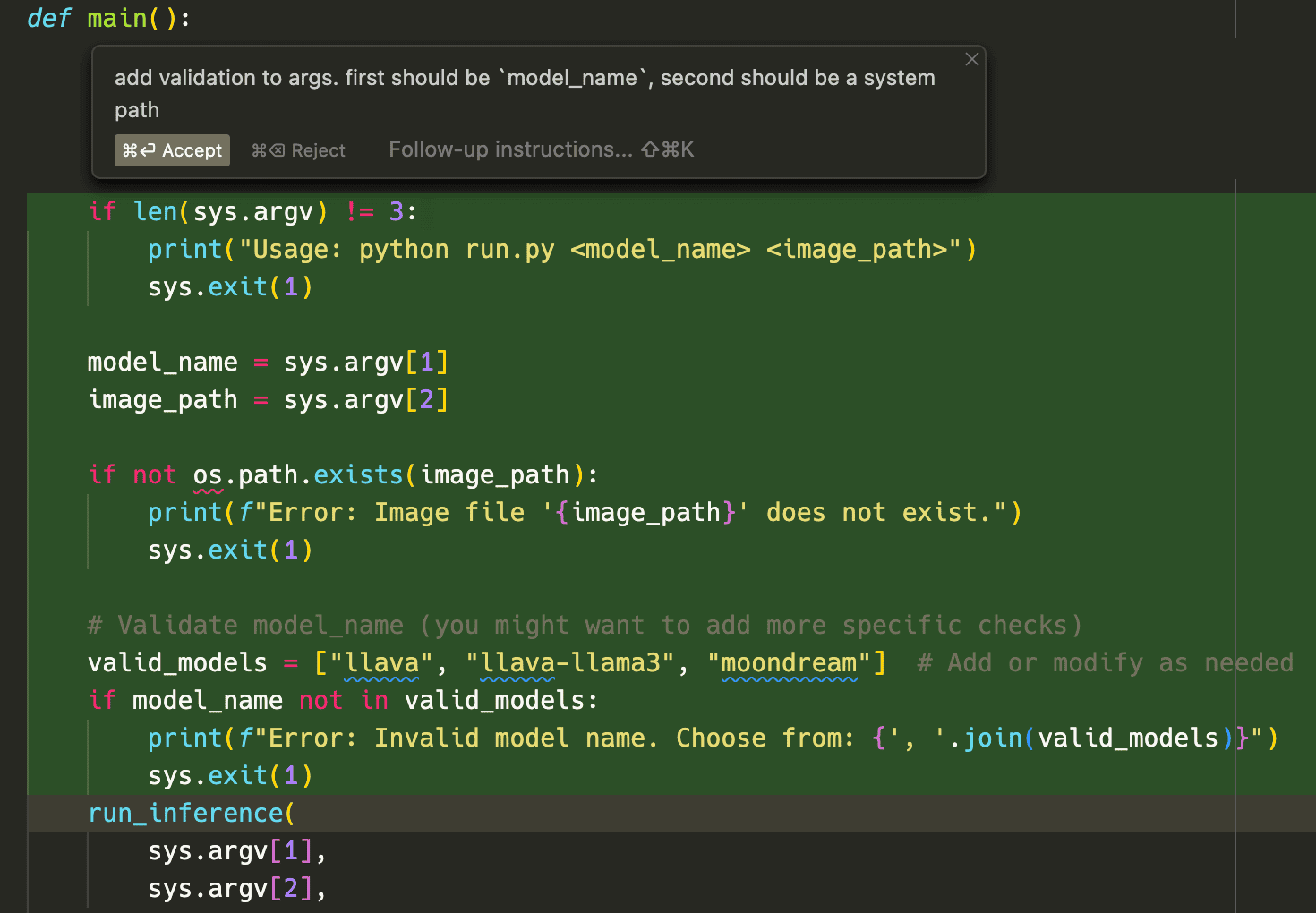

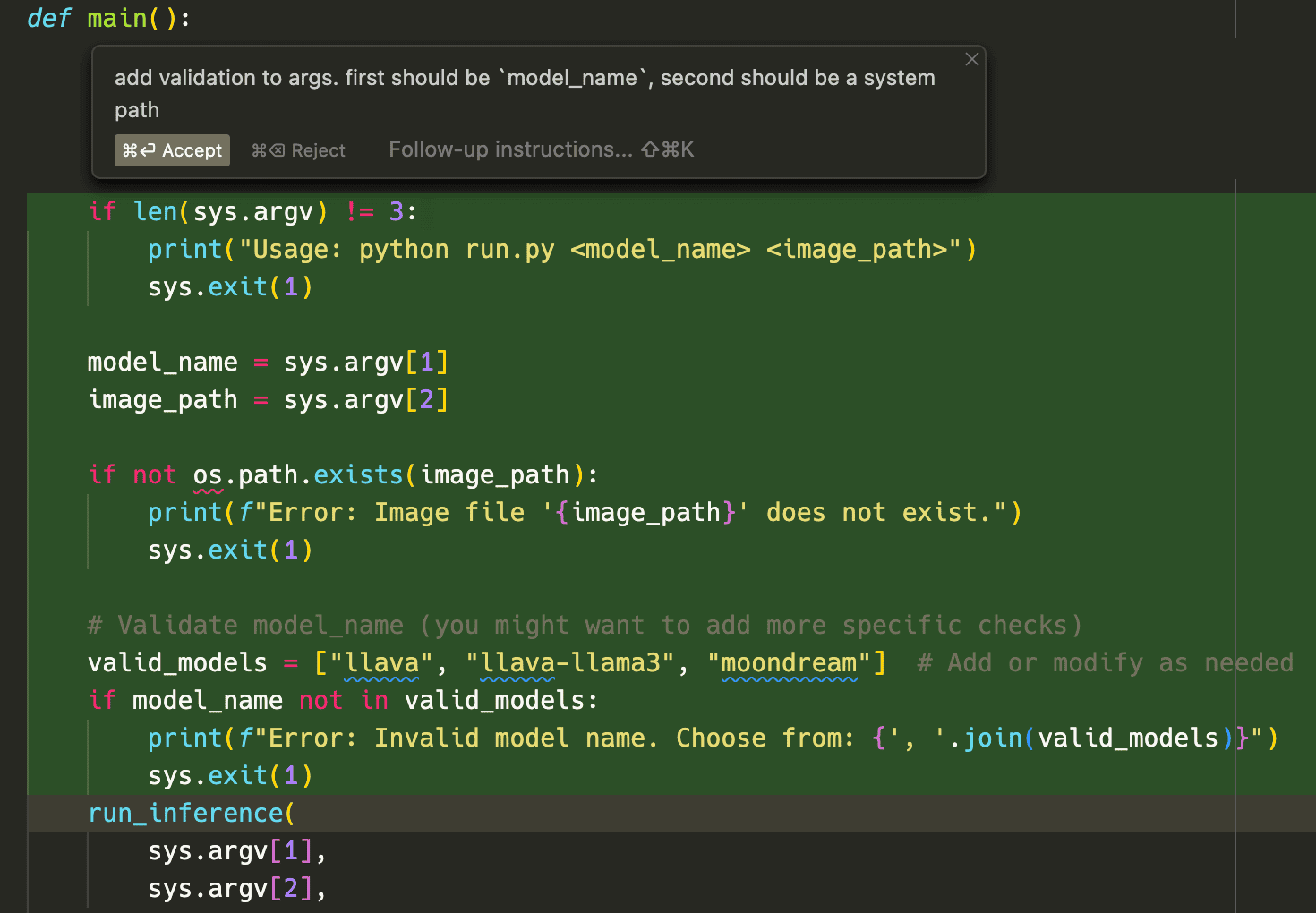

I was writing code with claude-3.5-sonnet and prompted it to add input validation for input arguments.

Most of the code was straightforward, and I was expecting the one-sentence prompt to get me 5-10 lines of code with path validation. I did not expect these lines.
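For comparison, here is a minimal sketch of the sort of short path validation I had in mind. It assumes a Python CLI built on argparse; the function names and the `input_path` argument are hypothetical, and this is not the code Sonnet produced.

```python
import argparse
from pathlib import Path


def existing_file(value: str) -> Path:
    """argparse type callable: accept only a path to an existing regular file."""
    path = Path(value)
    if not path.is_file():
        # argparse turns this into a usage error when parsing arguments.
        raise argparse.ArgumentTypeError(f"not a file: {value}")
    return path


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with one validated positional argument (hypothetical CLI)."""
    parser = argparse.ArgumentParser(description="Hypothetical CLI")
    parser.add_argument("input_path", type=existing_file)
    return parser
```

Calling `build_parser().parse_args([some_path])` returns a namespace whose `input_path` is a `Path` when the file exists, and exits with a usage error otherwise.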

I’m trying to avoid buying too much into the hype (maybe it’s too late), but here are several folks talking about their notably impressive experiences with claude-3.5-sonnet.

As I noted in this post, I am going to spend more time interacting with smaller models to try and build more intuition for how LLMs behave and the different flavors in which they respond.

Today, I spent some time chatting with Microsoft’s phi-3 mini (3.8B) using ollama.

In chatting with phi3, I found it neutral in tone and to the point.

It responds in a way that is easy to understand and is not overly complex or technical at the outset.

It doesn’t seem to have a strong sense of self.

I enabled Cursor’s Copilot++ today.

Magical.

So much better predictive capabilities than Copilot.

The way it anticipates my needs is pretty cool.

Edit: It’s not great for writing markdown or prose-only content.

I learned a bit more about Crafter, a Minecraft-like game that language models can play.

I enjoyed reading Jordan’s post, a walk down memory lane of his career so far through a series of emails.

He includes things like following up on internship opportunities, negotiating, and meeting people who would change the course of his career.

He inspired me to look back through some old emails as well, both to remember this time and acknowledge how much has changed since then.

I managed to find my original offer letter from Uber in 2016, which brought back many memories that I might write about in longer form sometime in the future.