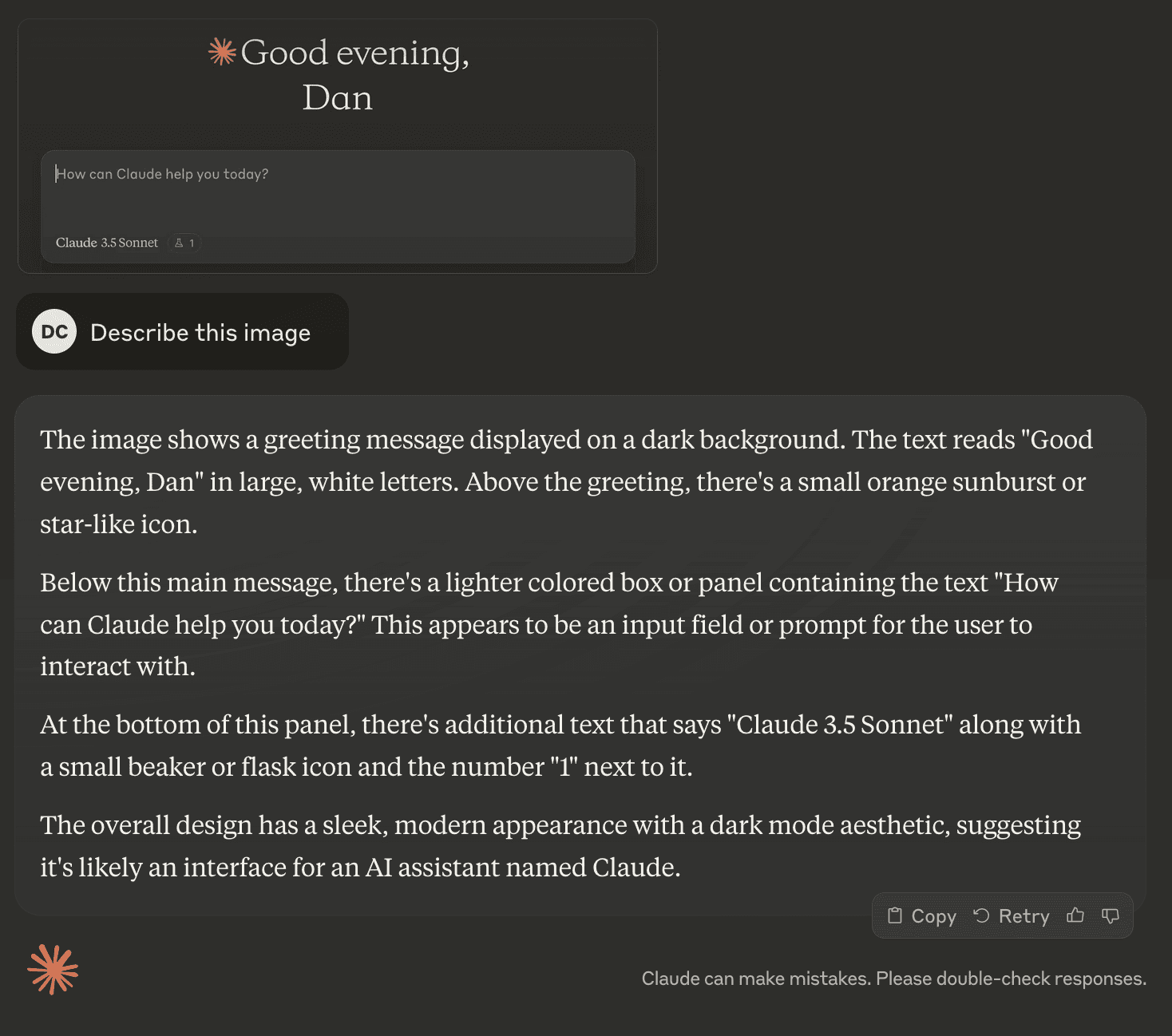

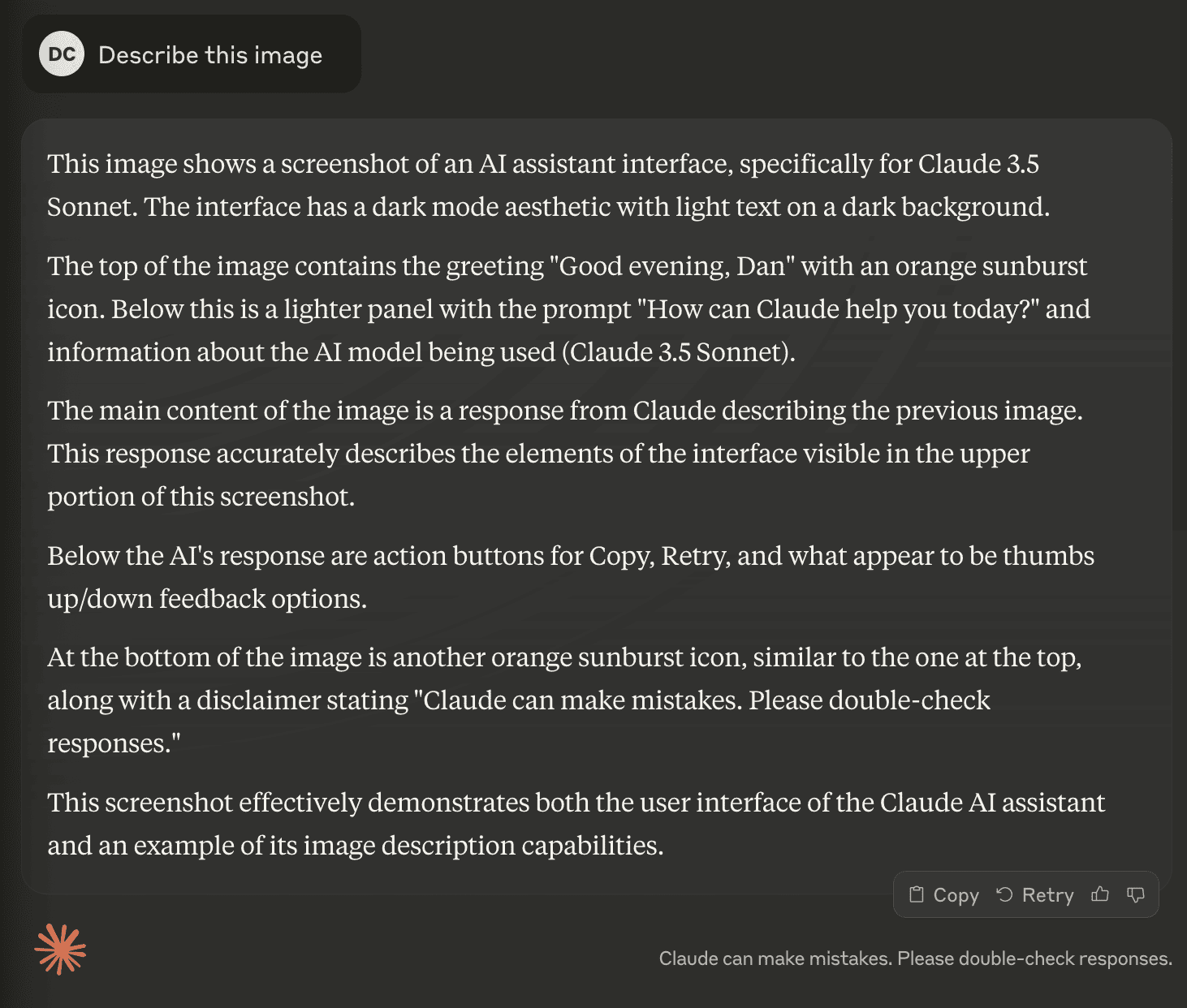

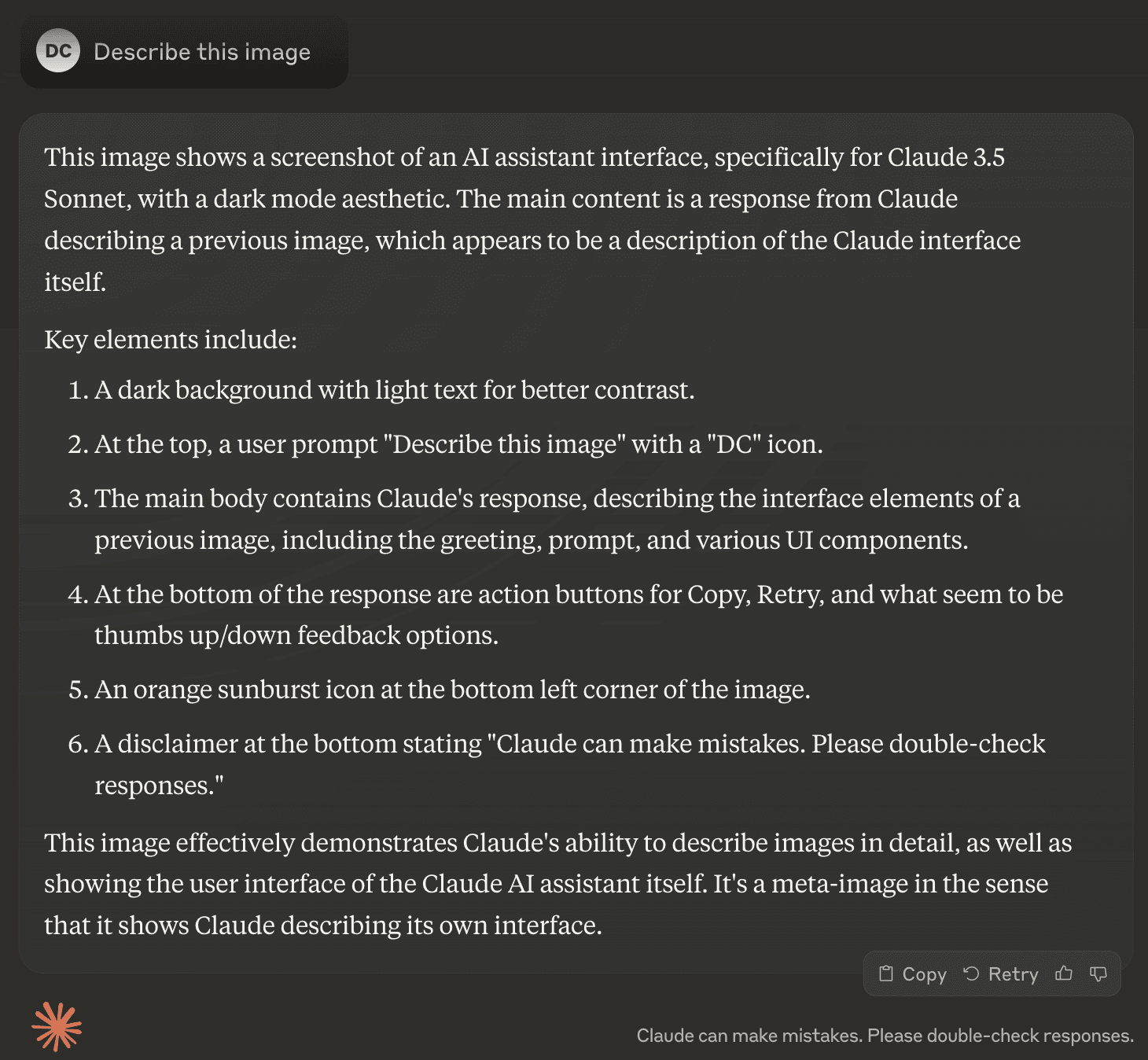

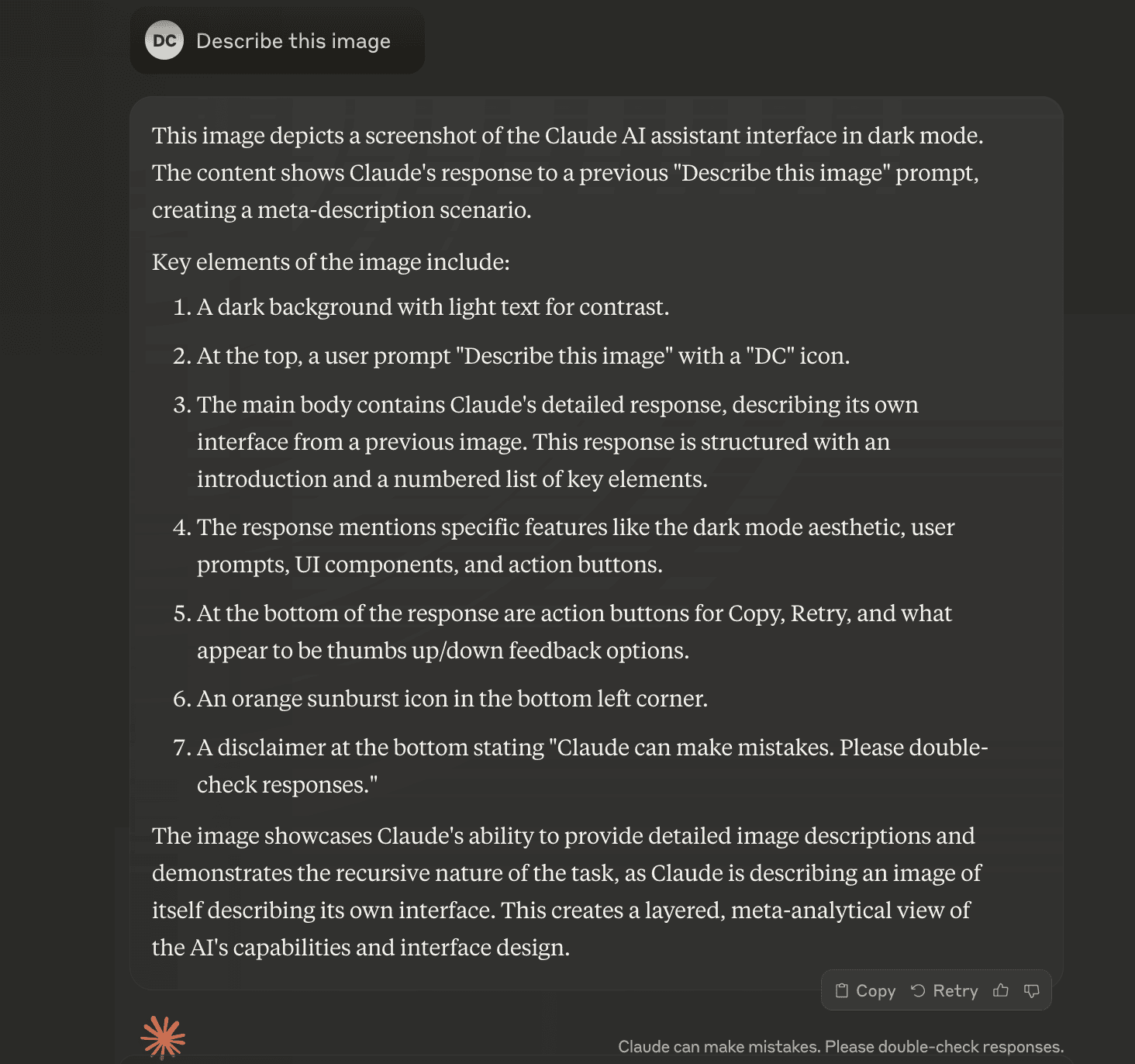

I reproduced Josh’s claude-3.5-sonnet mirror test. I hadn’t realized that gpt-4 and claude-3-opus had also been “passing” this test since as far back as March. More interesting still, Sonnet actually seems to resist speaking about itself in the first person. It's fascinating research, and a fascinating look at how the models’ behaviors are evolving. After reading a bit more, it appears this type of model behavior has been around at least since Bing/Sydney (paywall, sorry).

https://onemillioncheckboxes.com is an amusing, massively parallel art project(?)